Take your pick of any flagship phone — iPhone, Samsung Galaxy, Google Pixel, hell, even OnePlus or Xiaomi — and it will have a great camera. Not just one great camera, but a system of lenses (on the back and front) capable of taking excellent ultra-wide shots, portrait-style photos of people and pets, macro close-ups, zoomed-in pics, incredible night images, and steady and stabilized high-resolution videos.

But smartphones didn’t always have such versatile cameras.

Smartphone photography and videography in the early days of the iPhone and, especially, Android phones were pretty rough. Phone cameras took low-res photos and couldn’t shoot in the dark, and it was still better to carry a point-and-shoot or DSLR for videos that weren’t potato quality.

Very quickly, however, the iPhone’s camera pulled ahead of every other smartphone, as social media apps encouraged everyone to capture more, and all the time. By 2009, two years after the iPhone launched, its camera rocketed to become the most popular camera on Flickr, blowing past popular Canon and Nikon DSLRs of the time. The iPhone’s closed platform (made and controlled by Apple, compared to Android’s open source and more diverse array of phones built by dozens of companies) meant each year-over-year model easily built on the previous one. Cameras on Android phones were basically the Wild West — the digital eye felt like it only mattered after displays, processing power, battery life, and design were prioritized.

But that would start to change on April 16, 2014, when Google made the Google Camera app available on the Play Store and inadvertently showed Android phone makers how to make a great camera for their touchscreen slabs.

To Isaac Reynolds, the Group Product Manager for Google’s Pixel Camera, the release of the Google Camera app 10 years ago holds little significance for his work on bringing new camera features to new Pixel phones. Distributing the app was simply a few clicks on a computer. The work of building the best phone camera, one that could go beyond simulating more than 50 years’ worth of sensor capabilities and image processing in traditional single-lens reflex (SLR) cameras, had already begun years earlier under Marc Levoy. The former Distinguished Google Engineer helped pioneer many of the computational photography techniques (the use of software to augment camera sensors and lenses to enable capture capabilities not possible with hardware alone) we see in our phones today when he and his team worked on image fusion for Google Glass and Google Nexus phones.

“I don’t remember [Google Camera] making waves across Android,” Reynolds tells Inverse during a sit-down interview at Google’s main headquarters in Mountain View, California in late March. “It was showing the world through our own phones, our own code, and our launches on our devices, what computational photography could do. We see a lot of meaning in this concept of small cameras, constrained environment, and ‘Let's use software to make up the difference.’”

From the inside, the Google Camera app might not have meant anything to Reynolds, but for the people who suffered with the lackluster cameras on Android phones, it was a savior of sorts. Installing the Google Camera app allowed Android owners in those days to get not just a prettier-designed app, but photo and video quality that was objectively better than what came installed on their devices.

“It was the first time we saw phone camera sensors were really being held back by software and how this one app could make a phone’s camera better,” says Max Weinbach, a technology analyst at the research firm Creative Strategies. “It was a big deal. For example, Samsung’s camera processing wasn’t great, but with Google Camera’s image fusion HDR+ processing, you could make the camera take better photos than anyone thought it was ever going to be. We never saw Apple talk about computational photography until after Google Cam. We never saw Samsung do it. No one realized until Google did it, but the community saw it.”

Ten years after the release of the Google Camera app in the Play Store, the impact that it had on the whole phone industry continues to be felt with every new smartphone. But computational photography, once a novel feature for phone camera excellence, is now a staple. Have we again reached a point of diminishing returns? Or will artificial intelligence and the shift from camera capture to creation take phone cameras to another level?

To find out, I went deep into Google’s strongly secured Pixel design lab to uncover how the company pushed smartphone photography past its limits, and how it hopes to do it all over again.

The Power of Software

Whether or not the release of the Google Camera app was really the catalyst that nudged Android phone makers to build better camera apps, it’s evident a decade later that the app revolutionized smartphone cameras. It wasn’t until Google bet its own-designed phones (the Nexus and then the Pixel) on computational photography that we saw major leaps in stills and videos.

Reynolds, who started at Google in 2014 and joined the Pixel Camera team in 2015, is a phone, camera, and photography nerd. When we met up in person, he immediately dove into camera geek-speak — a language I’m quite fluent in. “I got into tech, never thinking I would get to work on phones, let alone cameras.” He’s helped lead the design of the Pixel camera since before the original Pixel phone, and now runs a product management team that builds 14 to 15 cameras across the entire Pixel devices lineup (phones, tablets, and Chromebooks).

“There was a time when we were buying smartphones that had cameras on them,” Reynolds says. “I’m not sure that’s true anymore. I think by usage and how much time you spend using it, we might now at this point be [buying] smart cameras that have phones attached.”

Watch any keynote or read any press release announcing a new phone, and the cameras are typically the most detailed section. Phone makers tout more resolution (megapixels), larger sensors that can capture more light, additional lenses such as ultra-wides and telephotos (sometimes multiple ones for extra zoom), more advanced optical image stabilization modules, etc. New hardware components can aid in taking better-looking photos and videos by sheer brute force, but that’s not the direction in which Reynolds and his team think smartphone cameras should evolve. He loves working on phone cameras because, at Google, they marry hardware and software techniques to go “beyond physics,” a motto that he borrowed from another Pixel team.

“We’re not trying to build a simulation,” Reynolds says, describing other phone cameras that rely mostly or solely on hardware to produce photos and videos. “If we’re trying to build a simulation, the best way to do that is to stick a piece of hardware in [a phone] because it comes with all of the tradeoffs. We said we don’t want those tradeoffs; we want to build something different; we don’t want to be stuck with the simulation; we want to go beyond the physics. And a lot of those solutions ended up making more sense in [combining] hardware with software.”

He points to portrait mode on Pixel phones as an example of how they’re not building a simulation of a camera lens. Whereas Apple has told me in the past that the bokeh (the quality of the background blur) in the iPhone’s portrait mode honors traditional camera lenses by simulating how the blur would look at an equivalent SLR lens focal length, Reynolds confirms to me what everyone has suspected all along: The bokeh for Pixel portrait photos is supposed to look the way it does, like the Gaussian blur effect you can apply to images in an image editor like Photoshop. “It’s not perfect so we built it better. We built it without the limitations of a real lens.” How you feel about that is a matter of preference.
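For the curious, the Photoshop-style look Reynolds describes can be sketched in a few lines of Python. This is a toy illustration, not Google's portrait pipeline: it works on a single row of brightness values, and the function names, the hand-written subject mask, and the blur settings are all assumptions made up for the example. The idea is simply to blur everything with a Gaussian kernel, then paste the sharp subject back on top.

```python
import math

def gaussian_kernel(sigma, radius):
    """Return normalized Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_row(row, kernel):
    """Blur one row of pixel values, clamping reads at the row's edges."""
    radius = len(kernel) // 2
    last = len(row) - 1
    blurred = []
    for i in range(len(row)):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = min(max(i + k - radius, 0), last)  # clamp index to the row
            acc += weight * row[j]
        blurred.append(acc)
    return blurred

def portrait_row(row, subject_mask, sigma=2.0, radius=4):
    """Keep subject pixels sharp and use the blurred value everywhere else."""
    blurred = blur_row(row, gaussian_kernel(sigma, radius))
    return [sharp if is_subject else soft
            for sharp, soft, is_subject in zip(row, blurred, subject_mask)]

# Hypothetical data: a busy background with a flat "subject" in the middle.
row = [0, 100, 0, 100, 50, 50, 50, 100, 0, 100, 0]
mask = [False] * 4 + [True] * 3 + [False] * 4
result = portrait_row(row, mask)
print([round(value, 1) for value in result])
```

A real portrait mode also has to work out where the subject is and how far away the background sits, which is where the depth estimation and machine learning come in. This sketch skips all of that by assuming the mask is already known.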

“Beyond physics means having all these capabilities with none of the weird quirks [of camera hardware alone],” he says, reminding me that a Pixel camera feature like Action Pan takes a single button press to capture a photo of a moving subject, such as a car, with a motion blur effect. “If you’re just focused on the things that were solvable in hardware 50 years ago, you’re not going to build the next innovation. We're big on research, innovation, and technology, and solving customer problems and being helpful. We want to make things that act like they should, like people want them to, not like people have learned to tolerate [in cameras].”

There’s no debate that Google has left an everlasting mark on mobile cameras and helped advance the documentation of our lives and our memories. Its list of phone camera innovations is long. Highlights that have been copied by virtually every phone include HDR+ (a technique that merges multiple images into one shot that correctly exposes the bright and the dark areas), Super Res Zoom (a method for upscaling details in zoomed-in photos in lieu of having a physical telephoto zoom lens), and Night Sight (a ground-breaking mode that made it possible to capture low-light and night photos with visible detail).
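The core idea behind merging a burst of frames is easy to demonstrate. The Python sketch below is a deliberately simplified stand-in, not Google's HDR+ or Night Sight pipeline: it assumes the frames are already perfectly aligned, uses a made-up scene and noise level, and merges by plain averaging. Even so, it shows why stacking helps: random sensor noise partly cancels out, so averaging nine frames cuts the noise to roughly a third of a single frame's.

```python
import random

def capture_noisy_frame(scene, noise_sigma, rng):
    """Simulate one short exposure: true pixel values plus random sensor noise."""
    return [pixel + rng.gauss(0.0, noise_sigma) for pixel in scene]

def merge_frames(frames):
    """Merge a burst by averaging each pixel position across all frames."""
    return [sum(values) / len(values) for values in zip(*frames)]

def rms_error(image, scene):
    """Root-mean-square difference between an image and the true scene."""
    squared = sum((a - b) ** 2 for a, b in zip(image, scene))
    return (squared / len(scene)) ** 0.5

rng = random.Random(42)  # fixed seed so the run is repeatable
scene = [rng.uniform(0.0, 1.0) for _ in range(10_000)]  # hypothetical "true" scene
burst = [capture_noisy_frame(scene, 0.1, rng) for _ in range(9)]

single_error = rms_error(burst[0], scene)
merged_error = rms_error(merge_frames(burst), scene)
print(f"single frame RMS error: {single_error:.4f}")
print(f"merged burst RMS error: {merged_error:.4f}")
```

Real phone pipelines have to do the hard parts this sketch ignores, such as aligning frames shot by shaky hands and rejecting pixels where something in the scene moved between shots.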

“Night Sight was an absolute game-changer and it took folks years to even have an attempt at following it up,” Reynolds says. “I don’t think anyone’s matched the drama — the night to day — and vibrant colors because no one is willing to saturate their colors. You only saturate colors, if you're confident that your colors are correct. If you ever see a desaturated low-light photo, it’s an intentional choice because [the phone maker] is afraid their colors are wrong. We love the vibrancy because it demonstrates how capable that software package is.”

Google gets credit for popularizing night modes in phones, but it was actually Huawei that first put a night mode on a phone, with the P20 Pro (before the company was crippled by U.S. sanctions for a few years).

“Night Sight was built on Huawei work,” Myriam Joire, a former Engadget technology blogger, tells Inverse. “Huawei did not get the first computational stuff, that was Google, but from then on [after Night Sight], they saw what Google was doing and were on it.”

Even the iPhone has leaned more into software to compensate for the compromises of physical lenses and camera sensors. Whereas portrait mode was originally exclusive to the iPhone 7 Plus and its dual-rear camera lenses, later iPhones including the iPhone XR and iPhone SE were endowed with portrait mode even with a single lens.

Leveraging software to handle some of the jobs of hardware has another benefit. “Software let Google keep costs down for a while,” says Weinbach, who extensively tests a range of smartphones available globally, from budget to mid-range to premium, to evaluate their value.

“We wanted to build a phone that was the best smartphone camera, but we knew we could do it at a better value,” Reynolds says. “[With software] we could do things on a $500 to $600 phone that folks couldn’t do on an $800 or $900 phone. That was an amazing achievement and that was because we blended hardware and software and AI. We could offer a ton at that price point.”

Solving Real Camera and Photography Problems

If software is the way to make up for the trade-offs that phone cameras have, what kind of camera-related problems do customers need solved? What’s left to improve now that photos and videos have reached an apex in terms of good-enough quality for sharing either on social media or in full resolution? Has computational photography plateaued? As smartphones start to fill up with artificial intelligence features (be it generative or background-based machine learning), what is next for phone cameras other than incremental improvements to picture sharpness, dynamic range, low-light photography, and the like? Naturally, as a data-driven company, Google follows the data.

“We let the customers decide. We do an amazing amount of studies to figure out what they want in a camera,” Reynolds says. “I always have a stack rank of global research with a high number of respondents to help us understand what are the problems we’re solving.”

He says features like Magic Eraser, which uses AI to help delete photobombers or background distractions, came out of solving the problem of people sending their pictures to somebody they knew who could Photoshop them out. Rather than people spending time buying tools or watching YouTube videos on how to edit a photo, the Pixel team built those tools into Google phones and made them simpler and faster to figure out.

“Like zoom, low-light, dynamic range, sharpness, blur is one of those foundational issues [that everyone has],” Reynolds says. That’s why his team created Photo Unblur, a feature introduced on the Pixel 7 that can take a blurry photo, perhaps the result of shaky hands or a moving subject (like children or animals), and make it sharper. “We want to build amazing technologies to solve problems. You don’t build amazing technologies by thinking incrementally. You have to think bigger and [take] big swings and big misses. You have to get comfortable with that.”

Solving these customer-requested camera pain points goes back to the philosophy of going “beyond physics.” Not creating a simulation of a traditional SLR camera is what guides Reynolds and his team.

“If you’re just building an SLR, you’re never going to build Best Take and that’s a tragedy — that’s such a missed opportunity,” Reynolds says, referencing a newer Pixel feature that combines the smiling faces of multiple shots into one perfect photo. “Someone who is just keeping their blinders on would not build the best phone. We’re not really competing with SLRs. We’re competing with an entire workflow.”

AI and the Shift From Camera Capture to Creation

I would be lying if I said I wasn’t a little bit concerned with how phone makers like Google are challenging the very idea of what a photo is. Is a photo a realistic snapshot of a moment in time, captured when a lens lets light hit a camera sensor’s photosites and that data is recorded as an image? Or is a photo supposed to represent how you remember that moment?

For example, let’s use Best Take. That final shot of a bunch of faces all perfectly smiling — no closed eyes or glances away from the camera — is how you remember the moment, but it might not have been what actually happened. Are we going down a slippery slope of creating false memories, especially as we get new AI-powered features like Best Take or Magic Editor, which go beyond erasing things with Magic Eraser? Google’s AI editing features straight up let you basically Photoshop an image by doing stuff like replacing the sky, or adding objects, or reframing subjects, or expanding what was actually captured.

It’s already somewhat of a philosophical question when we buy a particular phone because of the way its camera processes photos and video. On Pixel phones, the “dramatic look” with dark and contrasty shadows is by design because it’s “distinct and cool and fresh” compared to the look of captured content on iPhones and Samsung Galaxy phones, says Reynolds. That look will change over time with cultural trends, and Google has dialed back some of the dramatic aesthetic in favor of more highlights, but it’s still not a lifelike portrayal of reality.

“[Starting with Pixel 6] you started to see a shift from image processing to image creation, image generation,” he says. “It’s a shift from ‘Let’s make images less noisy and sharper’ to ‘Let’s recreate your memories,’ because your memories are different from reality, and that’s okay. That’s a perception and human thing. Let’s build for humans.”

When I note the difference between capturing reality and memories, Reynolds defends camera creation versus capture. “Your memories are your reality. What’s more real than your memory of it? If I showed you a photo that didn't match your memory, you’d say it wasn’t real.”

I’m not saying Reynolds is wrong, but it’s a matter of where you stand on capture versus creation. He makes a good point that AI and the many generative capabilities it brings are a way to democratize editing for the average person.

“Back then, people were using edits to cover up bad cameras,” Reynolds says. “These days all phones — a basic picture at a bright window and a low-contrast scene — they all look pretty good. Now, people see editing as part of their creative process, how to make a mark. That's why we’re also going into editors because we recognize that putting it all directly in cameras is not ideal anymore. People want to be able to press the button themselves afterward to make their own decision, not because they'd be unsatisfied with having it straight out of camera, but because they're putting more of their life and their soul into it.”

Weinbach, who tests a range of smartphone cameras and has also been tracking the advances in AI models and their applications across consumer products, is less convinced that AI-assisted editing and creation will have lasting appeal for phone cameras. “I don't think this generative AI ‘painting’ that we’re seeing with photos is really going to be a huge deal. It’s gonna be like the edit app on your phone. What else is inside that? It’s just making editing a little bit easier.”

Even if new phones will be sold as having all kinds of new AI camera features, Reynolds gently claps back when I ask whether he thinks smartphone cameras and computational photography have peaked.

“Computational photography is not dead,” he says, citing Zoom Enhance, a coming-soon feature for the Pixel 8 Pro that uses AI to enhance details in a photo when you zoom in on it, just like in an episode of CSI; and Video Boost, another exclusive feature for the Pixel 8 Pro that uses AI and cloud processing to brighten videos after they’ve been recorded. “As the world advances, we want to bring those advances back to you.”

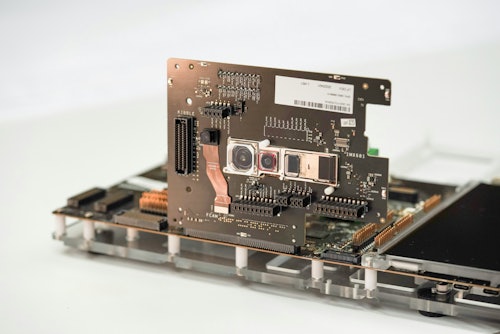

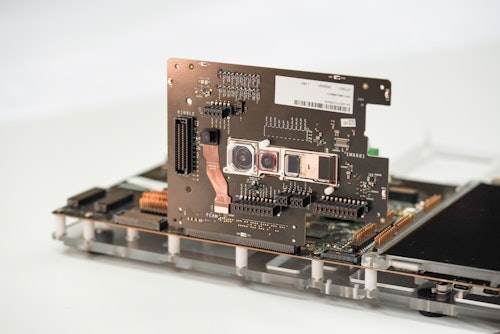

Having reviewed virtually every top-tier phone camera for over a decade, Joire echoes Reynolds’ view that computational photography still has a bright future ahead. Somehow, it always comes back to portrait mode. “Now we can machine learn and understand what’s in the background like cars and stuff, and teach the computer to get the edges around people perfectly.” Joire also suggests we could see new phones with even more camera lenses, similar to the ill-fated Light L16, a camera that had 16 lenses and sensors mounted into its phone-like body. The L16 touted computational photography as a way to combine the images from all of them into a super photo of sorts, with a focus point that could be fully adjusted after capture. The L16 failed because chip performance was not as advanced in 2017 as it is in today’s phones, but it’s possible another company could roll the dice on that idea again.

There’s also a lot more non-computational photography work that can be done for phone cameras. Real Tone, an image processing feature introduced on the Pixel 6 that captures more accurate skin tones, especially for people of color, was a first step, but Reynolds says there’s more that can be done in terms of cameras showing healthier perceptions of ourselves.

“I know how much a photo on camera can affect how you think about yourself, how you see yourself. People don’t understand what they look like based on a mirror anymore. Nowadays, they understand what they look like based on the photos that they can share on Instagram or other social apps. We have a responsibility to be a better mirror. Authenticity and representation, and building with the community are super, super important when you have that level of power over a person. There are directions that we can go in the future that’s helping people have healthier views of themselves, but that’ll be a different thing [from Real Tone] which is about a real view.”

I am fully supportive of more inclusivity for phone cameras, but you have to admit there is some irony in the Pixel Camera team tackling the responsibility of making skin tones more true to life for a “real view” while at the same time promoting generative AI camera and editing features that alter reality to how you want to remember the moment.

Nearing the end of our interview, Reynolds wants to make clear that “AI is not a goal, it’s a means to an end” and that his Pixel Camera team is using it to solve the “foundational problems” that people are having with their phone cameras. Right now, that means giving people editing capabilities in an easy-to-use way. But what he really wants to solve, and hopes AI might one day figure out, is “timing.”

“There does not exist a moment in time or it is hard to create a moment in time that looks like what you wanted,” he says. “When you’re building a simulation, your gold standard is that you’ve captured the moment in time that no one wanted. That’s a limitation. We would rather produce the moment in time that you wanted and remembered and was real.”

Today, the Google Camera app (renamed to Pixel Camera last fall) is no longer installable on any non-Pixel phone. It’s available in the Google Play Store as an app that can be updated with features by the Pixel Camera team for Google’s phones. There are a number of good reasons for this, mainly that the camera app is optimized for Google’s homegrown Tensor chips used in its Pixel devices and not for the Qualcomm Snapdragon, MediaTek, or other chipsets used by other Android phones. That loss may have no effect on Reynolds and his team working on improving the cameras on existing Pixel devices and on future, unannounced hardware, but for the Android community, it’s the end of an era. There’s still a niche mod scene that continues to hack past Google Camera app releases to work with non-Pixel phones, but the reality is most phone cameras have caught up to or surpassed Google on computational photography.

Inadvertently, the Google Camera app forced Android phone makers to invest more in making great cameras, and it worked.
